Advent of Code 2023: Let it snow

For the ninth December in a row, I’m playing with Advent of Code. Advent of Code is a series of 50 puzzles published by Eric Wastl, where you try to solve Christmas from some far-fetched horror. Every day from December 1st to December 25th, two puzzles become available, but the second is revealed only after you provide the answer to the first. In this post I will go over how you can solve them, and hopefully some interesting concepts along the way.

In this year’s story, there’s a problem with the amount of snow around the world1 which (while a problem you’ve solved before) requires drastic measures such as strapping you into a trebuchet and sending you into the sky, and into a set of puzzles.

As a bonus challenge, I tried to make the program that solves all of these problems run in less than the time a Python interpreter to start up and do nothing. On my questionable laptop, this takes 37 milliseconds. I did not succeed in this; after day 16 the budget was more than spent, and I gave up on hyper-optimizing. I’ll include a few tips that I used below for reference.

The puzzles

Day 6: Joe Speedboat

You’re given a few toy boats, where you can press a button to let them gain speed, and when you release the button the boat travels at that speed proportional to how long you held the button, which needs to cover some known distance within a given time . In other words, we’re looking for all the values .

The values given are disappointingly small so you can just brute force all possible , there’s room to be smart here. The solution that I should’ve thought of first is to realize that is a simple quadratic equation that you can just solve using the quadratic formula. This is also the fastest way I know to do this, and I’ve revised my initial solution to reflect this. However, I’ve been doing too much Leetcode lately and I’m not thinking of maths, I’m thinking of algorithmic tricks.

This is a problem that can also be solved using binary search to find the minimum value that goes further than . You can trivially prove that the distance covered is maximized when you spend half the allotted time holding the button, and use that as your upper bound. You can set your lower bound at 0 or 1, whichever you prefer.

Using these bounds, you can check in the middle between the two bounds. If that speed makes it further than , it is your new upper bound. On the other hand, if it doesn’t, your lower bound is the middle you tried, plus one, since you know the middle doesn’t work. You can repeat this until the lower bound and the upper bound are equal.

What surprised me is that for sufficiently small numbers, as present in part 1 of the puzzle, this algorithm is very nearly as fast as solving the quadratic equation directly. My only guess is that the square root needs to do a couple of rounds of the Newton-Raphson-method, or whatever estimate my CPU is using to compute roots. For larger numbers, the quadratic formula still decisively wins.

Day 8: Spooky scary camel paths

In today’s puzzle, we are stranded in a desert, and you have to follow a set of instructions telling you to go left or right, along a map that tells you where you end up as you go left or right at a given node. For the first part, there is no actual pathfinding involved; you only need to follow the instructions and accept that the map doesn’t make sense in Euclidean space. The only “smart” thing I managed to do here was to interpret all node names as base 26 numbers, alleviating the need for expensive hash maps. More on that later.

For the second part, our problem gets more interesting. We don’t start at node AAA, we start

simultaneously at all nodes that end in A, and we’re only done once all of our parallel realities

arrive at a node that ends in Z at the same time. Of course, you can try to simulate it all, but

for my input, it requires steps, which with my implementation takes

approximately forever.

Our only way forward then must be to find a pattern of when the camels arrive at “end” nodes, then use the Chinese Remainder Theorem2 to figure out the period of the whole cycle and determine when things align. There are various ways in which this can be made harder; there could be several end nodes on every cycle, which would blow the problem up.

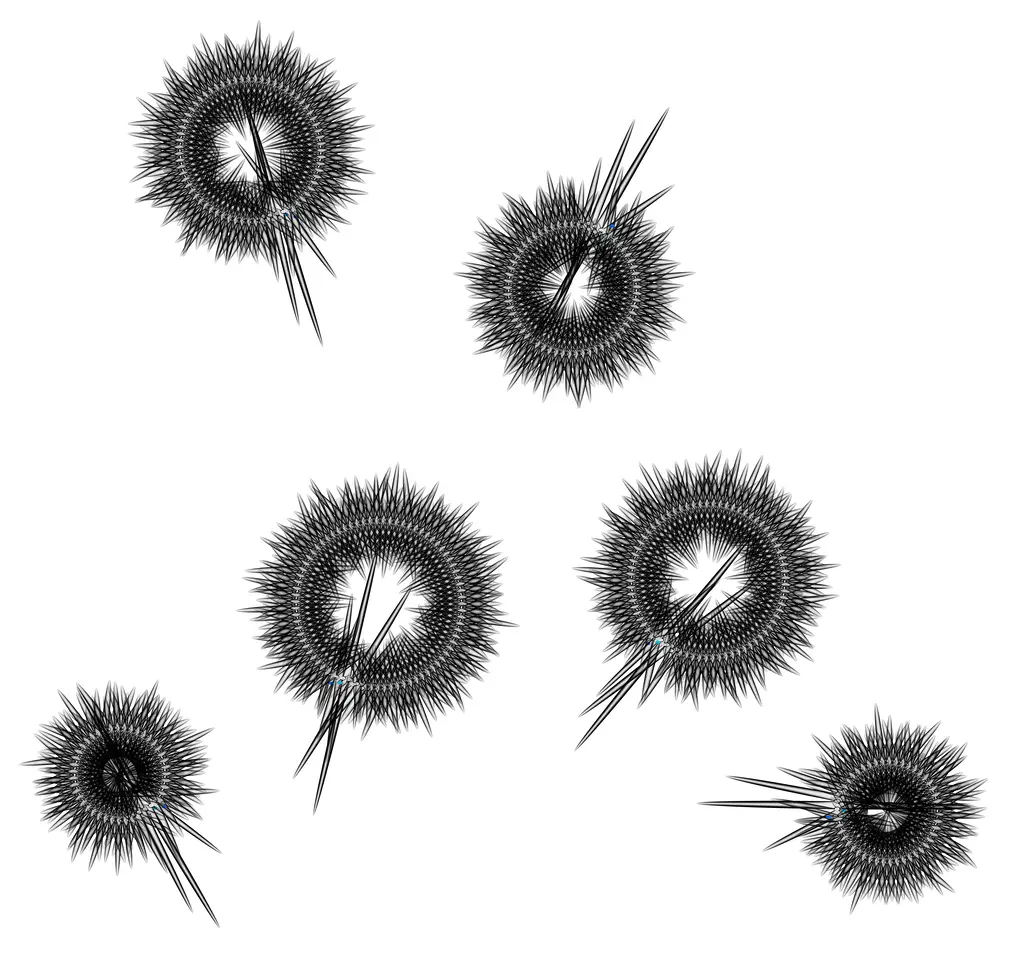

Paths that the ghosts travel. There are multiple edges between the same nodes, as it matters for the cycle to exist where in the left/right instructions list you are. The vector version of this image can be found as a 19MB SVG on Github Gist as it’s too large to be on this blog.

It turns out the input is not only not evil, it’s kind. There is only one end node in every loop, and the period of each loop is equal to the offset (the time it takes to reach the first end state). Because of that, our final answer is simply the lowest common multiple of all individual offsets. This is hard to see in the visualizations, but you can already see that for the most part, the path is a line around a circle without random jumps.

We can simplify the graph above a bit by removing duplicate edges. This reveals the paths for what they truly are: two circles, where you “randomly” switch between the inner and outer circles. At the end of one of the circles is your exit node, and then you start right back in, roughly at the same place your starting node put you. You can see that in the insert below.

Highlight of the cycle starting in JVA and ending in CJZ. Note how JVA joins the loop

immediately after CJZ, and also how the branching differs before and after the end node. Before

CJZ, the branching only goes away from the path with an end node, whereas afterwards, it is once

again strongly interconnected

In the making of these visualizations, I managed to crash both Graphviz and Imagemagick, and I figured out that Graphviz does in fact have a line length limit. Due to that, making this explanation turned out to be harder than solving the actual problem. Oh well.

Day 14: Rolling stones

Today we are simulating a bunch of rolling rocks lying on top of a mirror. We tilt the mirror in one

of the cardinal directions and then observe the rolling rocks O slide into either the edges or

into the stationary rocks #.

The first part of the puzzle verifies that you’ve understood the mechanics of the rolling boulders, and then the second part asks you to do this a ridiculous number of times. Luckily, at some point, the system will start to repeat a pattern and you can take a shortcut. You can use any cycle-finding algorithm to do so (I prefer a modified version of Brent’s Algorithm) to find this shortcut, and then simulate a much smaller number of iterations.

For me, this was the obvious solution, but I’ve been spoiled by 9 years of Advent of Code and other competitive programming interests. I was not going to write about this puzzle in detail, but a colleague who is not as familiar with all that asked me how he was supposed to know that it would repeat, and I didn’t have a clear answer for that. It feels like it should repeat, and even rather quickly, and while I cannot pinpoint why, I can show my clues:

- The number of rolling rocks doesn’t change

- The rocks will (after one cycle) always end up somewhere close to the bottom-left corner

- There is a limited amount of space to put the boulders there, and therefore a limited number of configurations.

That same colleague asked me where that intuition came from. For me, it came from a childhood toy. The very same toy that caused me to fear the second part today after I was reading part one. Instead of “what happens when you shake this thing a bunch of times” which is what we got, I feared the question: how do you have to shake the plate to end up with all the stones in one particular corner? Luckily it wasn’t that, and the toy below was not necessary to solve today’s puzzle.

Childhood toys turned into programmers’ nightmares. Special thanks to Pixabay user Alexas_photos for uploading the only royalty-free image of this thing I could find. I hope your animal protection work is going well.

Day 24: Linear Algebra 101

Welcome to the lecture, please take a seat. We are inside a hail storm, and we want to know if and where the hailstones collide. Every hailstone is defined by its three-dimensional current position and velocity vectors.

Part one

For the first half of the problem, we want to know which hailstones collide with each other in just the first two dimensions, and whether that happens in some limited bounding box. The first idea I came up with was to start with . Since we’re talking about two dimensions, this gives us the following system of linear equations:

This is a linear system of two equations with two unknowns, so this has either zero solutions or one solution3 in most meaningful cases, or infinitely many if the initial position and velocities happen to be equal. You can solve this with various techniques and I tried implementing the substitution method as it allows you to work in integers without losing precision. Unfortunately, this is hard and I got demotivated.

Luckily the puzzle is not as I’d feared, and our bounding is small enough to fit within the contiguous integer region of a double-precision float4 so you can solve the problem with them just fine. This allows you to rewrite the equations into the form of by computing the slope5 from the velocity vector and using this instead. When we set these equal as in the equation below, we only have one variable, which is trivial to solve. You can then work backwards to discover whether and are indeed in the future.

Part two

Unfortunately, the second half is not nearly as easy. None of the hailstones seem to collide when looking at all three dimensions. Instead, we’ll throw a rock into the storm at some known speed and make it hit every hailstone. This already gives us six unknowns: , , and for both our velocity and starting position.

At our disposal is a rather infinite supply of equations: each individual hailstone. But we only need three. This gives us three additional unknowns (the time of impact for each of the stones) but also nine equations, one for every dimension of every hailstone.

This number makes intuitive sense as well for me. When you consider only 1 hailstone, you can hit it from basically any direction at any speed. With two hailstones, there are still a lot of options that work out, and they form a kind of plane. This still makes sense, since you have two unbound variables in this case, which do indeed form a plane. Only with three hailstones, there is one path that uniquely intersects all of them. You can of course add more hailstones, but they will only complicate your system of equations.

It turns out you don’t need nine equations, you can make it work with six. Remember, we’re solving the following equations:

That last step may seem weird (it gets rid of the time factor) but it’s actually a property of the cross product . Two parallel vectors have a cross product of zero and since the vector is equal to the vector , it is also by definition parallel to it, and a scalar multiplier such as can be divided out without changing the direction. This is a bilinear equation in both and , which you can then set equal between different pairs of hailstones and solve it.

Anyway, since solving large systems of equations math is not my forte I enlisted the help of a

general linear algebra crate, ndarray. By itself, it cannot solve

unfortunately, so I had to add a separate crate that can do it. The supported way appears to be

ndarray-linalg which wraps the classical Fortran lapack library. I briefly tried to get that to

work, but I couldn’t get it to pick up on the openblas libraries in my system, and then it wanted

to compile them itself. I instead found the linfa-linalg crate that offers the

same functionality in pure rust. It doesn’t appear very maintained, but I can attest that it works

perfectly fine. That just leaves the equation to solve. I put it below.

Other days

Not every puzzle has a lot of detail to it, but I still want to share my notes for them as they might be of use to someone else. In order:

-

Day 1 I used the

aho-corasickcrate to detect overlapping matches, as regular regex tends not to find overlapping matches. You need overlapping matches to correctly identify the value offiveightas 58 rather than 55. This is an optimal solution on paper, but a colleague of mine showed that since the words we’re searching for are relatively slow, just hardcoding the matching code from the beginning or end is even faster. -

Day 2: You can ignore the individual grabs of marbles and just look at the largest number of marbles present for any particular colour. To my surprise, this still worked for part two.

-

Day 3: Part 1 was relatively straightforward, for part 2 the key insight is that you want to store the values that are connected to cogs per cog. Also small assumption that the numbers are only connected to a single cog, but this happens to be true for my input.

-

Day 4: One observation: lottery tickets are integers below 100 (since they’re at most two digits) so you can represent the tickets in a set as bits in a 128-bit integer. This makes determining winners a simple bitwise AND operation.

-

Day 5: All mappings are the same, so you can just make them the same. I initially thought of using a BTree for look-ups per section, but a sorted array has the same time complexity and better cache locality.

For part two, Rust happens to be fast enough that my part 1 solution could solve it by brute force in under a minute, but by transforming entire ranges of seeds at once you can speed it up considerably.

-

Day 7: Fun puzzle, only one snag. You should read the text well. I completely missed that the J moves down in the priority order of the second part, which cost me an unreasonable amount of time.

-

Day 9: This was not too difficult, but it was fun to think about how to implement this efficiently. For me the, crucial observation was that you are computing the backwards diagonals as shown in the examples. Then after finishing the puzzle, I realized that you don’t have to special-case the initial values and that if you treat those as derivatives as well the whole system still works.

-

Day 10: Part one is not interesting, but for part two I learned something new about geometry. You can use a kind of ray-casting to count the number of times you cross an edge of a polygon, and if that’s odd, you’re on the inside. I’ve seen this called the crossing lines theorem but I can no longer find the sources where I got it from and now I wonder whether it was all a dream.

-

Day 11: Classic Advent of Code puzzle. The first half shows you a simple problem, and the second verifies you didn’t pick the hardest way of solving it.

-

Day 12: “Hot Springs” referring to actual metal springs is quite possibly the best pun in the event’s history. Eric, if you’re reading this, it’s okay that you’re not sorry. I’m proud. Also, the problem is a good introduction to Dynamic Programming, should you need one.

-

Day 15: The puzzle was designed to teach you about hash maps, and then make you use them. The only tricky part is that for part 2 you need to remember the insertion order of the items. In Python, the

dictalready does that, in other languages, you need to opt into it. The data structure you’re looking for is called the linked hash map. It combines the traditional hash map with a linked list going through the items, which maintains the insertion order. -

Day 16: Just do it, still looking for a way to be smarter than “just try every individual position.”

-

Day 17: Dijkstra and A* are still interesting algorithms. No other work is required.

-

Day 18: Carrying on from day 10, we’re looking for the area of a polygon. The crossing lines theorem I used back then might still work, but there’s also the shoelace formula, which elegantly computes the area as long as you know the coordinates of the corners.

-

Day 20: The start of the final stretch, and for me the first truly hard challenge. I didn’t like that part twp requires a deeper investigation of the network. I always feel coding specifically for my input instead of for the problem is doing it “wrong.” I made a visualisation to see whether the structure would make more sense. It does not.

A diagram of the signal nodes and connections in day 20. Conjunctions are shown as red triangles, and flip-flips are shown as light-blue boxes.

-

Day 21: Second unreasonably hard puzzle. In the first half, you learn the magic of parity. Because you cannot move diagonally, and therefore cannot waste moves, you can only ever reach squares that are 1. reachable in fewer than 64 steps, and 2. have an even distance.

Part two, I don’t quite know. For reasons that are caused by the shape of the real input but not the sample input, there’s a repeating pattern. The number of garden plots you can reach whenever you reach the edge of a map tile (after 65 steps, and then every 131 steps) fits a quadratic expression. You can simulate until you reach the first three, and then extrapolate from there. Conveniently, the number of steps you have to simulate is .

-

Day 22: Still a lot of work, but in the end, it was a fun, messy simulation. Nothing is fundamentally hard about it. You can do a topological sort to avoid a repeated simulation in part two, but not doing it is still reasonably fast.

-

Day 23: It took me embarrassingly long to figure out my graph simplification code. The main trick I went with in the end is to distinguish between “junction I need to do something with” and “path I need to do something with.”

The longest path problem is fundamentally NP-hard, though the slopes remove any potential cycles in part 1. For part 2, this is no longer true, and you get to brute force all of the potential routes. Takes a while, even in Rust, but it’s not hard assuming you programmed part 1 right.

-

Day 25: Finally, I have relevant expertise. The puzzle tries to teach you about the Minimum cut problem, and once upon a time, I wrote a thesis around the related maximum flow problem. The minimum cut is the minimum amount of (weighted) connections that need to be cut to be disjoint. In this case, we’re not looking for that, since we’re given the answer: three. Instead, we’re interested in where to cut.

I tried to implement Karger’s algorithm but I failed to correctly detect the cases where it doesn’t find the cut correctly. Instead, I opted to repeatedly find the shortest path between two random nodes, and count how often each edge is used. The most used edges are maybe probably the right ones.

And that’s all the notes I had on the puzzles.

Performance tricks

During the first half of the event, I worked on over-optimizing my puzzle solutions in hopes of solving all puzzles in less than the time it takes for a Python interpreter to start up. I got the idea from a blog post I read a few years ago but I’m not able to find any more. Nevertheless, here are what few tips I have based on my experience trying to optimize my runtime.

-

Don’t use hashmaps if you can avoid it. While your access is , your constant factor is important, and Rust’s hash function is somewhat inefficient by design, for security reasons. Many times, if you can use a large array and map your keys to integers yourself, you should.

-

Avoid allocations. Try to reuse heap-allocated collections by passing buffers to inner functions. You also want to avoid nested collections, as you’ll easily start getting bottlenecked on the allocator. On Day 9 for example, I managed to reduce the number of lists in my input to two, by storing all of the numbers in one list and then in a second list store ranges of indices that belong to each line. Admittedly, you could implement this puzzle with streaming which might even be faster.

-

Avoid large types. Rust written naturally has a bunch of “moves”, where the compiler has to effectively

memcopya variable from one place to another. In most cases, the optimizer can make this efficient by constructing things in their final location, but this does not always happen. To help, you can instead use indirect storage. For example, instead of an[u8; 1024], you can instead use aBox<[u8]>, or even aBox<[u8; 1024]>, but do note that constructing the latter will sometimes run into stack overflow issues. -

Be mindful of your iteration order. DFS is generally better than BFS by a surprising margin, even with an explicit stack. The improved locality of your data access is surprisingly important for your cache hit rate. The same goes for iterating over grids; if you can mostly read the grid in the order that it is stored in memory, it will likely be faster.

All of these tricks combined helped me achieve a total runtime of slightly under 0.4 seconds. Much worse than I’d originally set out to, but I’m pretty content with the result.

Cumulative time spent by the solution program every day. Notice how the last few days take up most of the run time. Target time is from a previous year’s attempts to finish in under 0.5 seconds.

Individual time spent on each part. Notice the scale is logarithmic.

In conclusion

This year’s edition of Advent of Code was pretty hard. According to the subjective opinion of some guy on Reddit, it is the second hardest edition so far. To me, it was the first year that I truly got burned out near the end.

The last five days required a lot of work to solve, either because programming them was somewhat tedious, complicated math was involved, or because you shouldn’t try to solve the generic problem and should look at your input. They were not fundamentally wrong, but the lack of rest days in between was a bit much. The Reddit threads also show a pattern of “I just threw Z3 at it and it solved it” which is the opposite of fun to me.

I know I’m doing the puzzles wrong by trying to over-optimize on time, but even with that in mind, I don’t think this was particularly fun. You might also notice that I didn’t have any fun and elegant algorithms to talk about in this review.

Should there be a next edition6 I’ll probably still participate, but in a language that is more suitable for bodging together a quick and dirty solution. Python is always nice, though if I find something new along the way that’s also cool.

Let me not discourage you; Advent of Code was still fun. It’s kept me working hard throughout the month, and finishing my 450th star was still satisfying.

Happy New Year, and let’s meet back up for the puzzles in December.

-

A rather realistic story, at least in the Netherlands. ↩︎

-

As long as the puzzles keep using it, I’m going to keep mentioning it, mostly because it’s one of the few mathsy things I know. ↩︎

-

This is a theorem that I think has a name, but I don’t know any more. Please send me an email and I’ll correct this. ↩︎

-

Floating points are roughly equivalent to scientific notation in base two, written as where the mantissa and the exponent . While the finer details are irrelevant, the important bit is that, with both and fixed-width integers, there’s a limited range of values you can represent exactly. For double-precision floats, the largest integer up until where every single integer is representable exactly happens to be 9,007,199,254,740,992, or roughly 22 times the upper limit of our bounding box. ↩︎

-

It is of course possible for the slope to be undefined, whenever a hailstone has an velocity of . This does not happen in the input, but if it did, it even simplifies the equations, as suddenly you know the coordinate of the collision already. It’s where you started. ↩︎

-

Why ever stop at nine when you can stop at ten? ↩︎